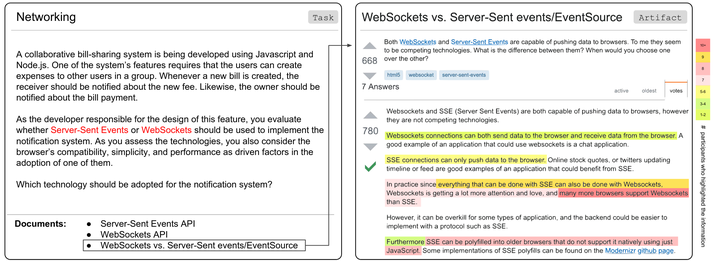

Information relevant to a task based on the number of highlights made by study participants.

Information relevant to a task based on the number of highlights made by study participants.

Abstract

To complete a software development task, a software developer often consults artifacts which mostly consist of natural language text, such as API documentation, bug reports, and Q&A forums. Not all information within these artifacts is relevant to a developer’s current task, forcing them to filter through large amounts of irrelevant information, a frustrating and time-consuming activity. Since failing to locate relevant information may lead developers to incorrect or incomplete solutions, many approaches attempt to automatically extract relevant information from natural language artifacts. However, existing approaches are able to identify relevant text only for certain types of tasks and artifacts. To explore how these limitations could be relaxed, we conducted a controlled experiment in which we asked 20 software developers to examine 20 natural language artifacts consisting of 1,874 sentences and highlight the text they considered relevant to six software development tasks. Although the 2,463 distinct highlights participants created indicate variability in the perceived relevance of the text, the information considered key to completing the tasks was consistent. We observe consistency in the text using frame semantics, an approach that captures the key meaning of sentences, suggesting that frame semantics can be used in the future to automatically identify task-relevant information in natural language artifacts.